- I recommend the Pixel 9 to most people looking to upgrade - especially while it's $250 off

- Google's viral research assistant just got its own app - here's how it can help you

- Sony will give you a free 55-inch 4K TV right now - but this is the last day to qualify

- I've used virtually every Linux distro, but this one has a fresh perspective

- The 7 gadgets I never travel without (and why they make such a big difference)

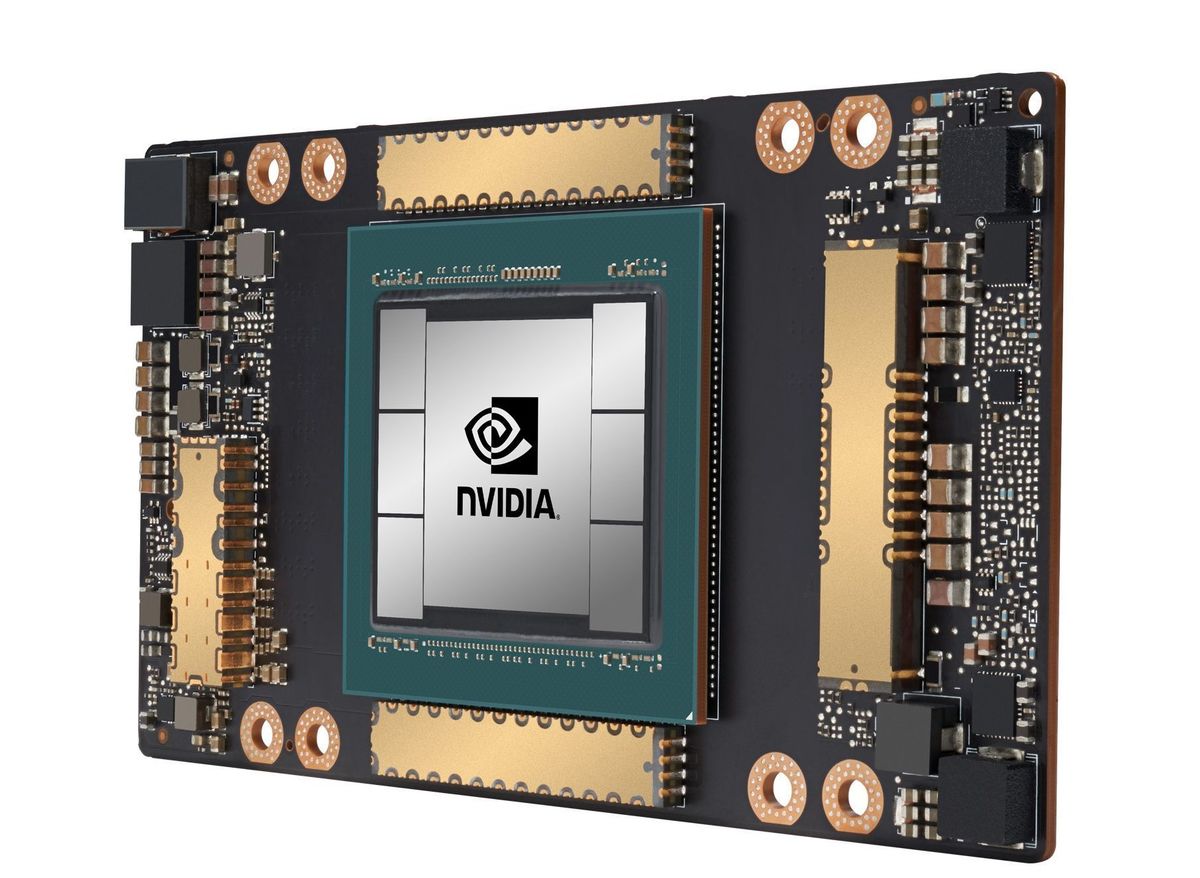

Amazon Web Services launches Nvidia Ampere-powered instances

Amazon Web Services (AWS) has announced the general availability of a new GPU-powered instance type, Amazon EC2 P4d, based on Nvidia’s new Ampere architecture, and the two companies are making big performance claims.

AWS has offered GPU-powered instances for a decade now; the most recent generation before P4d is P3. AWS and Nvidia both claim that, compared with P3 instances, P4d instances offer three times the performance, up to 60% lower cost, and 2.5 times more GPU memory for machine learning training and high-performance computing (HPC) workloads.

According to Nvidia, the instances cut the time needed to train machine-learning models by up to three times with FP16 and up to six times with TF32, compared with the default FP32 precision, and they also make it possible to train larger, more complex models.
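To make the FP32/TF32/FP16 trade-off more concrete, here is a small Python sketch that uses only the standard library's `struct` module. It illustrates that TF32 keeps FP32's 8-bit exponent (and therefore FP32's range) while cutting the mantissa down to FP16's 10 bits. The helper names are hypothetical, and `to_tf32` simply truncates the extra bits; it does not model how Ampere hardware actually converts values, so treat it as an illustration of the number formats rather than of Nvidia's implementation.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (FP16): 5 exponent bits, 10 mantissa bits."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fits_fp16(x: float) -> bool:
    """Return True if x is within FP16's range (struct raises OverflowError otherwise)."""
    try:
        to_fp16(x)
        return True
    except OverflowError:
        return False


def to_tf32(x: float) -> float:
    """Approximate TF32: FP32's 8-bit exponent (same range) but only 10 mantissa bits.

    Sketch only: the 13 low-order bits of FP32's 23-bit mantissa are truncated,
    not rounded.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits &= 0xFFFFE000  # keep sign (1) + exponent (8) + top 10 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    print(fits_fp16(70000.0))  # False: beyond FP16's maximum of 65504
    print(to_tf32(70000.0))    # 69952.0: still in range, just less precise
```

With 70000.0, for example, the value does not fit in FP16 at all, while its TF32 approximation stays in range and only loses precision, which is why TF32 can stand in for FP32 where FP16's narrower range would overflow.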

These are some heavyweight instances, too. P4d instances with eight Nvidia A100 GPUs are capable of up to 2.5 petaflops of mixed-precision performance and 320GB of high-bandwidth GPU memory in one EC2 instance. AWS said P4d instances are the first to offer 400 Gbps network bandwidth with Elastic Fabric Adapter (EFA) and Nvidia GPUDirect RDMA network interfaces to enable direct communication between GPUs across servers for lower latency and higher scaling efficiency.
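Dividing the headline per-instance figures by the eight GPUs gives a rough per-GPU picture. The Python sketch below does only that arithmetic on the numbers quoted above; it assumes the totals split evenly across eight identical GPUs, and the constant and function names are illustrative, not taken from any AWS or Nvidia tool.

```python
# Per-instance figures quoted in the announcement for one P4d instance.
GPUS_PER_INSTANCE = 8          # Nvidia A100 GPUs
MIXED_PRECISION_PFLOPS = 2.5   # peak mixed-precision petaflops
GPU_MEMORY_GB = 320            # total high-bandwidth GPU memory
NETWORK_GBITS_PER_S = 400      # network bandwidth in gigabits per second


def per_gpu_share() -> dict:
    """Split the instance totals evenly across the GPUs (an assumption)."""
    return {
        "teraflops_per_gpu": MIXED_PRECISION_PFLOPS * 1000 / GPUS_PER_INSTANCE,
        "memory_gb_per_gpu": GPU_MEMORY_GB / GPUS_PER_INSTANCE,
    }


def network_gbytes_per_s() -> float:
    """Convert the network figure from gigabits to gigabytes per second."""
    return NETWORK_GBITS_PER_S / 8


if __name__ == "__main__":
    print(per_gpu_share())         # {'teraflops_per_gpu': 312.5, 'memory_gb_per_gpu': 40.0}
    print(network_gbytes_per_s())  # 50.0
```

On those assumptions, each GPU accounts for about 312.5 teraflops of mixed-precision throughput and 40GB of memory, and the 400 Gbps link works out to 50 gigabytes per second.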

Each P4d instance also offers 96 Intel Xeon Scalable (Cascade Lake) vCPUs, 1.1TB of system memory, and 8TB of local NVMe storage to reduce single-node training times. By more than doubling the performance of the previous-generation P3 instances, P4d instances can cut the cost of training machine learning models by up to 60%.

“As data becomes more abundant, customers are training models with millions and sometimes billions of parameters, like those used for natural language processing for document summarization and question answering, object detection and classification for autonomous vehicles, image classification for large-scale content moderation, recommendation engines for e-commerce websites, and ranking algorithms for intelligent search engines—all of which require increasing network throughput and GPU memory,” AWS said in a statement.

The company said customers can run P4d instances using AWS Deep Learning Containers, which include libraries for Amazon Elastic Kubernetes Service (Amazon EKS) and Amazon Elastic Container Service (Amazon ECS). For a more fully managed experience, customers can use P4d instances through Amazon SageMaker, a service designed to help developers and data scientists build, train and deploy ML models quickly.

HPC customers can use AWS Batch and AWS ParallelCluster with P4d instances to orchestrate jobs and clusters. P4d instances support all major ML frameworks, including TensorFlow, PyTorch and Apache MXNet, giving customers the flexibility to choose their preferred framework.

P4d instances are available in the US East (N. Virginia) and US West (Oregon) regions, with more regions planned soon. On-demand pricing starts at $32.77 per hour, dropping to $19.22 per hour with a one-year reserved instance and $11.57 per hour with a three-year reservation.
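The three pricing tiers are easier to compare as percentages. The Python sketch below uses only the hourly prices quoted in this article. Its break-even calculation is a simplifying assumption: it treats a reservation as paid for every hour of its term whether or not the instance runs, while on-demand is billed only for hours used, and it ignores the different upfront-payment options AWS offers for reserved instances.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years

# Hourly prices quoted in the article, in US dollars.
ON_DEMAND = 32.77
RESERVED_1YR = 19.22
RESERVED_3YR = 11.57


def savings_vs_on_demand(rate: float) -> float:
    """Fractional saving of an hourly rate relative to the on-demand rate."""
    return 1 - rate / ON_DEMAND


def annual_cost(rate: float, hours: float = HOURS_PER_YEAR) -> float:
    """Cost of running one instance for the given number of hours at a flat rate."""
    return rate * hours


def break_even_utilization(rate: float) -> float:
    """Share of the time an instance must run before a reservation beats on-demand.

    Assumption: the reserved rate is charged for every hour of the term.
    """
    return rate / ON_DEMAND


if __name__ == "__main__":
    for name, rate in [("1-year reserved", RESERVED_1YR), ("3-year reserved", RESERVED_3YR)]:
        print(f"{name}: {savings_vs_on_demand(rate):.0%} cheaper per hour, "
              f"break-even at {break_even_utilization(rate):.0%} utilization")
    print(f"On-demand, 24/7 for a year: ${annual_cost(ON_DEMAND):,.2f}")
```

On these numbers, the one-year and three-year rates are roughly 41% and 65% cheaper per hour than on-demand, so under the stated assumption a reservation only pays off if the instance is busy more than about 59% (one year) or 35% (three years) of the time.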

Copyright © 2020 IDG Communications, Inc.